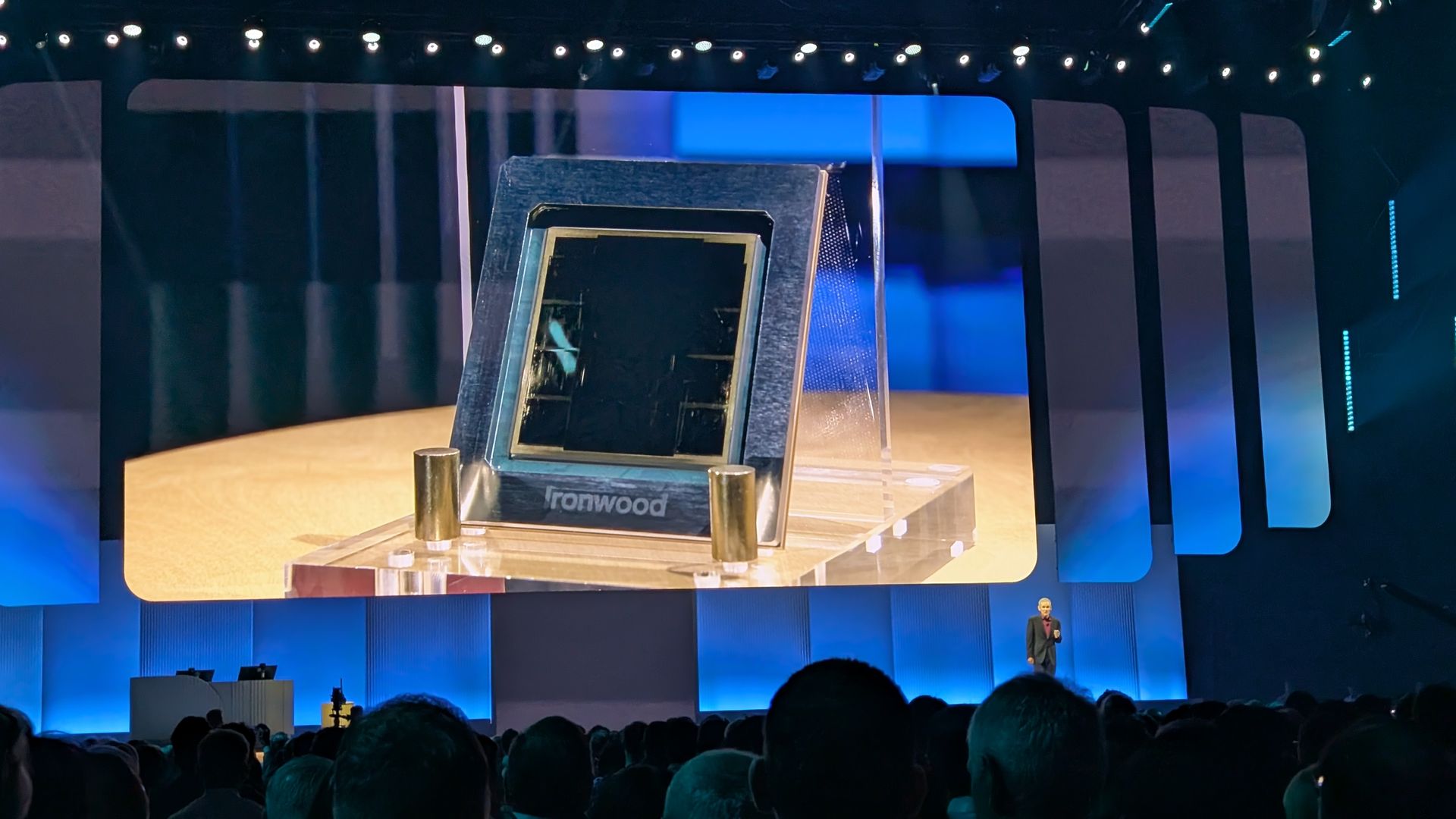

- Google introduces Ironwood, their 7th-gen Tensor Processing Unit (TPU).

- Ironwood is tailored for inference, which presents the latest major hurdle for artificial intelligence.

- It provides significant improvements in performance and efficiency, and even surpasses the capabilities of the El Capitan supercomputer.

Google has unveiled its most advanced AI training hardware yet as it aims to make significant progress in inference capabilities.

Ironwood is the firm's seventh-generation Tensor Processing Unit (TPU), the hardware that powers both Google Cloud's own operations and its customers' AI training and workload handling.

The hardware was unveiled at the firm's Google Cloud Next 25 event in Las Vegas, where the company emphasized significant improvements in efficiency. These gains suggest that workloads could also run at a lower cost.

Google Ironwood TPU

The firm indicates that Ironwood represents "a considerable change" in AI advancement, transitioning from reactive AI models that merely provide current data for users to analyze, to more advanced systems capable of interpreting and drawing conclusions independently.

Google Cloud believes this represents the future of AI computing, allowing its most demanding customers to set up and run ever-larger workloads.

At its largest configuration, Ironwood can scale up to 9,216 chips per pod, offering a combined 42.5 exaflops of compute. That is more than 24 times the computational capacity of El Capitan, currently the world's largest supercomputer.

Each chip delivers peak compute of 4,614 teraflops, which the company says is a significant advance in both capacity and performance, even in the smaller configuration of only 256 chips.
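As a quick check, the pod-level figure follows from multiplying the per-chip peak by the chip count. The sketch below uses only the numbers quoted above. The function name is illustrative, and it assumes ideal linear scaling, which real workloads are unlikely to reach.

```python
# Peak per-chip figure quoted in the article; sustained throughput will be lower.
PEAK_TFLOPS_PER_CHIP = 4_614
TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10^6 teraflops


def pod_peak_exaflops(chips: int) -> float:
    """Aggregate peak compute for a pod, assuming ideal linear scaling."""
    return chips * PEAK_TFLOPS_PER_CHIP / TFLOPS_PER_EXAFLOP


full_pod = pod_peak_exaflops(9_216)
small_pod = pod_peak_exaflops(256)
print(f"9,216 chips: {full_pod:.1f} exaflops")
print(f"256 chips: {small_pod:.2f} exaflops")
```

The full pod works out to roughly 42.5 exaflops, matching the headline figure, while the 256-chip configuration comes to a little under 1.2 exaflops.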

The scale can be pushed further still, since Ironwood lets developers use Pathways, the software stack created by Google DeepMind, to harness the combined compute of many thousands of Ironwood TPUs.

Ironwood also brings a major increase in high-bandwidth memory capacity: 192GB per chip, six times that of Trillium, the previous sixth-generation TPU. Memory bandwidth reaches 7.2TBps, 4.5 times what Trillium offers.
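Those ratios imply baseline figures for Trillium, which can be worked out by simple division. This is a rough sketch that reads "six times" and "4.5 times" as straight multiples. The resulting Trillium numbers are derived here, not stated in the article, and the variable names are illustrative.

```python
# Ironwood per-chip figures and improvement ratios quoted in the article.
IRONWOOD_HBM_GB = 192
IRONWOOD_BANDWIDTH_TBPS = 7.2
HBM_RATIO = 6.0
BANDWIDTH_RATIO = 4.5

# Implied Trillium baselines (derived by division, not quoted in the article).
trillium_hbm_gb = IRONWOOD_HBM_GB / HBM_RATIO
trillium_bandwidth_tbps = IRONWOOD_BANDWIDTH_TBPS / BANDWIDTH_RATIO

print(f"Implied Trillium HBM capacity: {trillium_hbm_gb:.0f}GB per chip")
print(f"Implied Trillium HBM bandwidth: {trillium_bandwidth_tbps:.1f}TBps")
```

On that reading, Trillium would have about 32GB of high-bandwidth memory per chip and about 1.6TBps of bandwidth.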

Amin Vahdat, VP/GM of Machine Learning, Systems, and Cloud AI at Google, noted that for over ten years TPUs have powered the company's most intensive AI training and serving workloads, and have allowed its Cloud customers to do the same.

Vahdat described Ironwood as Google's most advanced, versatile, and energy-efficient TPU to date, designed specifically to drive large-scale inferential AI models.
