According to research from the NYU Tandon School of Engineering, a new approach to streaming technology could significantly improve how users experience virtual reality and augmented reality environments.

The research, presented in a paper at the 16th ACM Multimedia Systems Conference (ACM MMSys 2025) on April 1, 2025, describes a technique for directly predicting which content will be visible in immersive three-dimensional spaces. The approach could potentially cut bandwidth usage by as much as seven times without compromising the visual experience.

The technology is currently being utilized in an ongoing NYU Tandon initiative aimed at integrating point cloud video into dance education, enabling 3D dance lessons to be streamed on regular devices with reduced bandwidth needs.

"The primary difficulty in streaming immersive content lies in the enormous volume of data needed," said Yong Liu, a professor in the Electrical and Computer Engineering Department (ECE) at NYU Tandon, a member of NYU Tandon's Center for Advanced Technology in Telecommunications (CATT), and a faculty member at NYU WIRELESS, who led the research group.

Conventional video streaming transmits everything within a frame. This technique instead works more like tracking your gaze as you move around a room: it processes only what you are actually looking at.

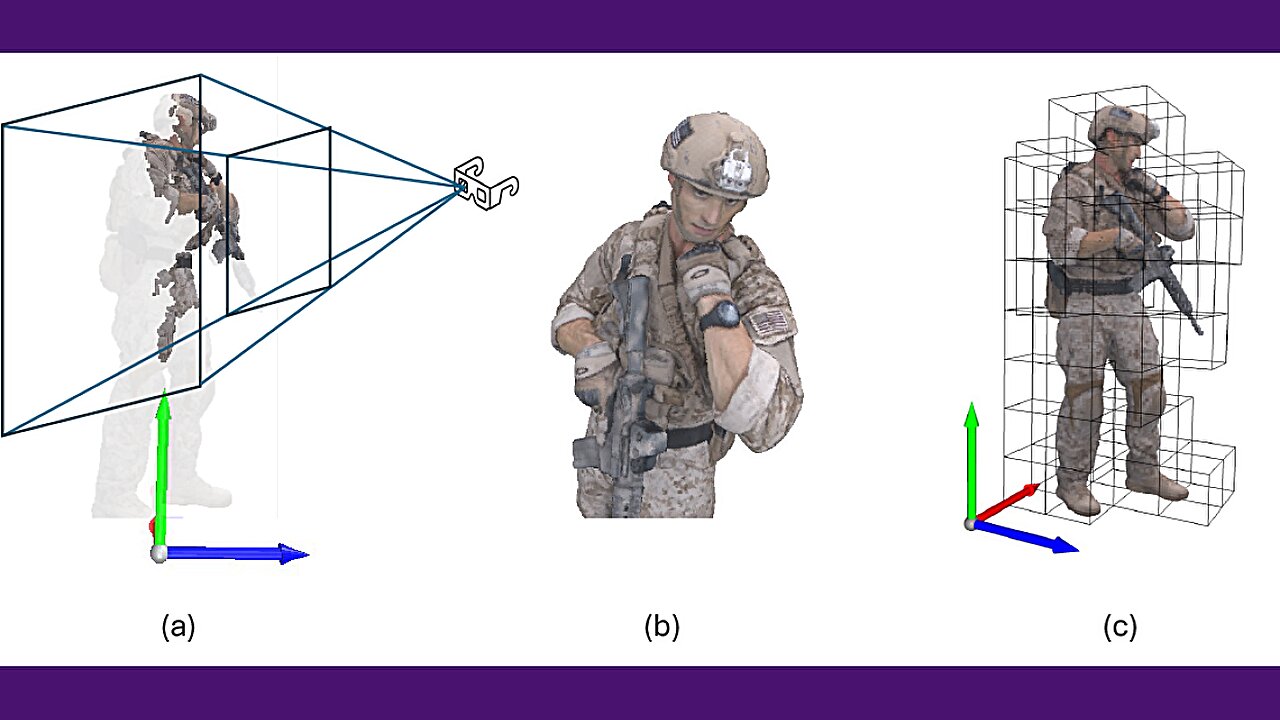

This technology tackles the field-of-view (FoV) challenge for immersive experiences. Present AR/VR apps require substantial bandwidth: point cloud video, which represents 3D environments using millions of spatial data points, demands over 120 megabits per second, roughly ten times the rate needed for regular HD video.
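To put those figures side by side, here is a back-of-the-envelope calculation in Python. It uses only the numbers quoted in this article (120 Mbps, roughly ten times HD, and a best-case sevenfold reduction). The function names are illustrative, and the results are rough estimates, not measurements from the paper.

```python
# Figures quoted in the article; everything else is derived from them.
POINT_CLOUD_MBPS = 120.0  # "over 120 megabits per second"
HD_RATIO = 10.0           # "roughly ten times" regular HD video
MAX_REDUCTION = 7.0       # "as much as seven times" (best case)


def implied_hd_mbps(point_cloud_mbps=POINT_CLOUD_MBPS, ratio=HD_RATIO):
    """HD bitrate implied by the article's comparison."""
    return point_cloud_mbps / ratio


def reduced_mbps(point_cloud_mbps=POINT_CLOUD_MBPS, reduction=MAX_REDUCTION):
    """Bitrate after applying the best-case bandwidth reduction."""
    return point_cloud_mbps / reduction


if __name__ == "__main__":
    print(f"Implied HD bitrate: {implied_hd_mbps():.1f} Mbps")
    print(f"Best-case point cloud bitrate: {reduced_mbps():.1f} Mbps")
```

Under these assumptions, the best case brings point cloud streaming down to roughly 17 Mbps, which is in the same range as the HD bitrate implied by the article's comparison.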

In contrast to conventional methods that initially forecast where a user might focus their attention before determining what is visible, this innovative technique directly assesses content visibility within the 3D environment. By bypassing the sequential steps of location prediction followed by visibility calculation, this method minimizes errors and enhances the precision of predictions.
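The conventional two-step pipeline described above can be sketched roughly as follows. This is a simplified illustration, not code from the paper: it assumes linear extrapolation for the position forecast and a simple viewing-cone test that ignores occlusion, and the names `extrapolate` and `in_view_cone` are hypothetical. Any error in the first step carries directly into the second, which is the compounding the direct approach is meant to avoid.

```python
import math


def extrapolate(prev, curr, steps):
    """Step 1: forecast a future position by assuming constant velocity."""
    return tuple(c + steps * (c - p) for p, c in zip(prev, curr))


def in_view_cone(eye, gaze, target, half_angle_deg):
    """Step 2: check whether target lies within a cone around the gaze direction.

    Simplification: occlusion and distance limits are ignored.
    """
    to_target = tuple(t - e for t, e in zip(target, eye))
    dist = math.sqrt(sum(v * v for v in to_target))
    gaze_len = math.sqrt(sum(v * v for v in gaze))
    if gaze_len == 0.0:
        raise ValueError("gaze direction must be non-zero")
    if dist == 0.0:
        return True  # the target coincides with the viewer position
    cos_angle = sum(a * b for a, b in zip(to_target, gaze)) / (dist * gaze_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))


if __name__ == "__main__":
    # Viewer moved from x=0 to x=1; forecast two steps ahead.
    eye = extrapolate((0, 0, 0), (1, 0, 0), steps=2)
    # Visibility is then evaluated from the forecast pose, not the true one.
    print(eye, in_view_cone(eye, (0, 0, 1), (3, 0, 5), half_angle_deg=45))
```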

The system segments three-dimensional space into individual “cells” and considers each of these cells as nodes within a graph network. By employing transformer-based graph neural networks, it captures the spatial connections among adjacent cells. Additionally, it utilizes recurrent neural networks to examine the changes in visibility patterns over time.
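The cell-and-graph structure can be illustrated with a short sketch. It assumes a uniform cubic grid and links each occupied cell to its six face-adjacent neighbors. The cell size, the neighbor rule, and the function names are assumptions made for illustration, and the neural network layers that operate on the graph are not shown.

```python
from collections import defaultdict

# Face-adjacent neighbors in a cubic grid (an assumed connectivity rule).
NEIGHBOR_OFFSETS = [
    (1, 0, 0), (-1, 0, 0),
    (0, 1, 0), (0, -1, 0),
    (0, 0, 1), (0, 0, -1),
]


def assign_cells(points, cell_size):
    """Group 3D points by the integer index of the cell that contains them."""
    cells = defaultdict(list)
    for x, y, z in points:
        key = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        cells[key].append((x, y, z))
    return dict(cells)


def build_cell_graph(cells):
    """Map each occupied cell (a graph node) to its occupied neighbors (edges)."""
    graph = {}
    for i, j, k in cells:
        candidates = [(i + dx, j + dy, k + dz) for dx, dy, dz in NEIGHBOR_OFFSETS]
        graph[(i, j, k)] = [c for c in candidates if c in cells]
    return graph


if __name__ == "__main__":
    points = [(0.1, 0.2, 0.3), (0.9, 0.1, 0.1), (1.5, 0.2, 0.2), (5.0, 5.0, 5.0)]
    cells = assign_cells(points, cell_size=1.0)
    for node, neighbors in build_cell_graph(cells).items():
        print(node, "->", neighbors)
```

In a setup like this, a per-cell visibility score would be predicted for each node, and only cells likely to be visible would need to be streamed at full quality.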

In the case of pre-recorded virtual reality content, the system anticipates what will be seen by the user up to 2-5 seconds in advance. This represents a notable enhancement compared to earlier systems which were capable of forecasting the field of view with precision merely fractions of a second beforehand.

"The intriguing aspect of this work lies in its time frame," remarked Liu. "Earlier systems were capable of precisely forecasting what a user would view just a short moment ahead. This group has significantly expanded upon that capability."

The methodology employed by the research group cuts down prediction inaccuracies by as much as 50% when contrasted with current techniques for extended forecasts, all while sustaining real-time performance at a rate exceeding 30 frames per second—even for point cloud videos containing upwards of 1 million points.

For consumers, this could mean more responsive AR/VR experiences with reduced data usage, while developers can create more complex environments without requiring ultra-fast internet connections.

Liu mentioned that they are observing a shift wherein AR/VR technology is evolving from niche uses towards becoming mainstream for both leisure activities and routine work instruments. He pointed out that bandwidth limitations have hindered progress, but this study aids in overcoming those constraints.

The researchers have released their code to support continued development.

More information: Chen Li et al, Spatial Visibility and Temporal Dynamics: Rethinking Field of View Prediction in Adaptive Point Cloud Video Streaming, Proceedings of the 16th ACM Multimedia Systems Conference (2025). DOI: 10.1145/3712676.3714435

Provided by NYU Tandon School of Engineering

This story was originally published on Tech Xplore.