For millions of deaf and hard-of-hearing people around the world, communication barriers can make everyday interactions difficult. Traditional solutions such as sign language interpreters are often scarce, costly, and dependent on human availability. In an increasingly digital world, demand is growing for smart assistive technologies that offer real-time, accurate, and accessible communication tools to close this gap.

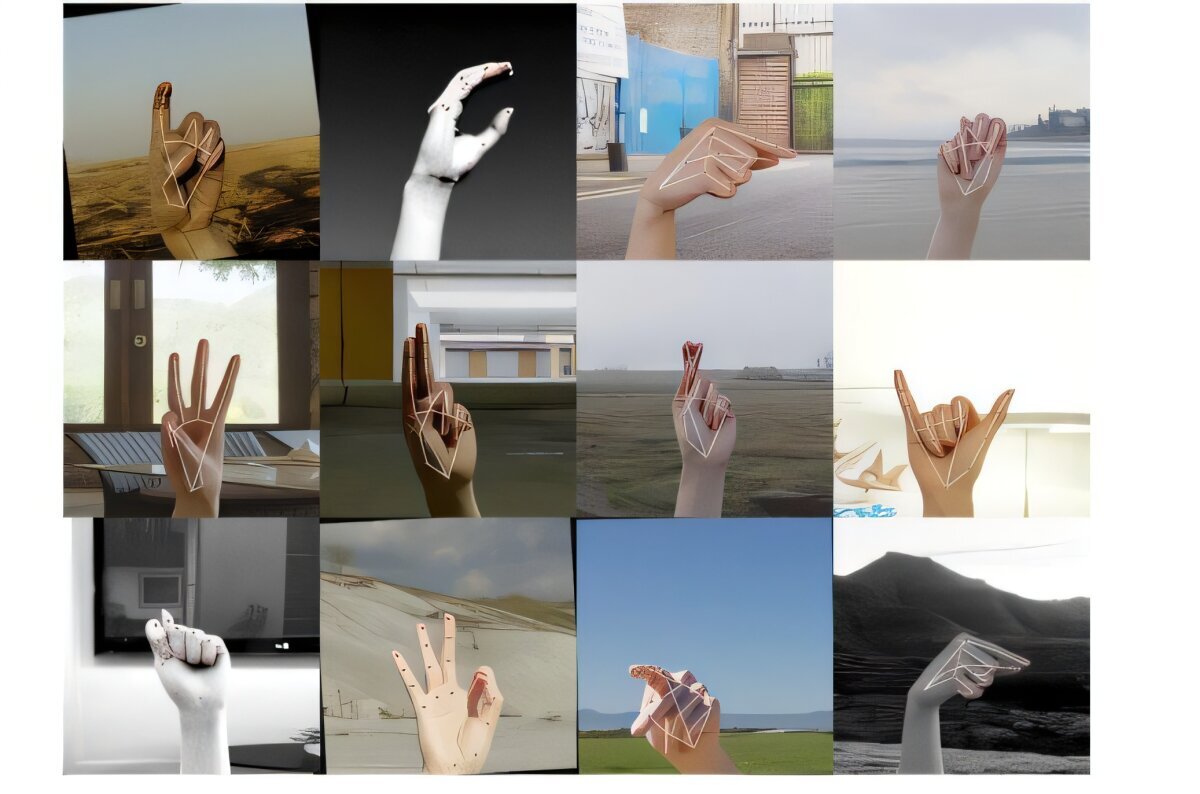

American Sign Language (ASL) is one of the most widely used sign languages. It relies on distinct hand gestures to represent letters, words, and phrases. Existing ASL recognition systems often struggle with real-time performance, accuracy, and robustness across different environments.

A major challenge for ASL systems is distinguishing visually similar signs such as “A” and “T” or “M” and “N,” which often leads to misclassification. The datasets used to train them pose further problems, including low image resolution, motion blur, inconsistent lighting, and variation in hand size, skin tone, and background. These factors introduce bias and limit a model's ability to generalize across different users and conditions.

To address these issues, researchers from the College of Engineering and Computer Science at Florida Atlantic University have developed a real-time ASL interpretation system. By combining the object detection capabilities of YOLOv11 with MediaPipe's hand tracking, the system recognizes ASL alphabet letters in real time. Using deep learning and tracking of key hand points, it translates ASL gestures into text, letting users interactively spell out names, locations, and more.
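The article does not say how per-frame letter predictions are assembled into text. The sketch below shows one common approach, offered purely as an assumption: a letter is accepted only after it has been predicted for several consecutive frames. The function name `spell_from_frames` and the `min_frames` threshold are hypothetical and do not come from the study.

```python
from typing import Iterable, Optional


def spell_from_frames(frame_letters: Iterable[Optional[str]],
                      min_frames: int = 5) -> str:
    """Collapse per-frame letter predictions into spelled text.

    Hypothetical helper, not the authors' method. A letter is emitted once
    it has been predicted for `min_frames` consecutive frames. It is not
    emitted again until the prediction changes (to another letter or to
    None, meaning no hand was detected).
    """
    text = []
    current = None  # prediction currently being held
    run = 0         # number of consecutive frames showing `current`
    for letter in frame_letters:
        if letter == current:
            run += 1
        else:
            current, run = letter, 1
        # Emit exactly once, at the moment the run reaches the threshold.
        if current is not None and run == min_frames:
            text.append(current)
    return "".join(text)
```

With this rule, a brief flicker of a wrong letter for a frame or two is ignored, and a repeated letter needs a short gap between the two signs before it is counted a second time.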

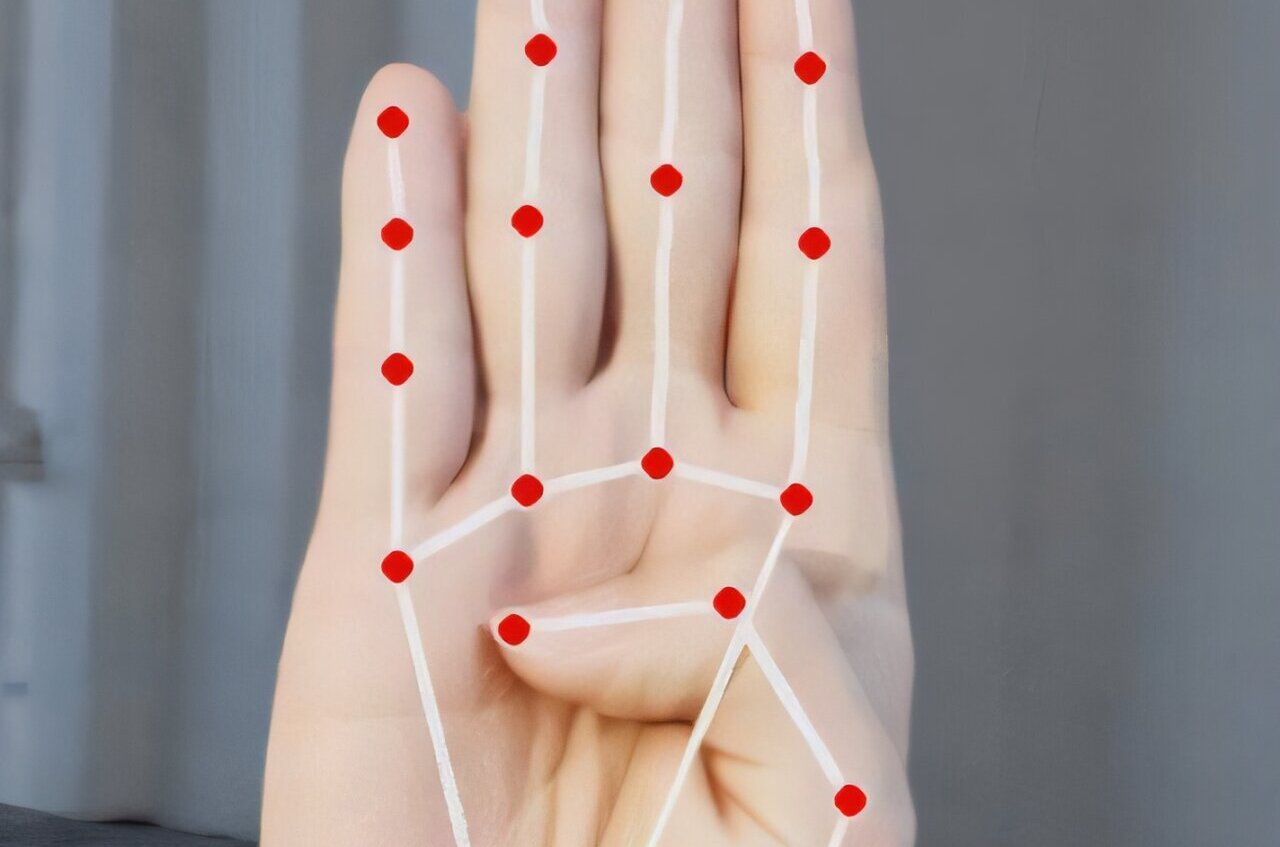

A built-in webcam serves as a contact-free sensor, capturing live visual data that is converted into digital frames for gesture analysis. MediaPipe identifies 21 keypoints on each hand to build a skeletal map, while YOLOv11 uses these points to detect and classify ASL letters with high precision.
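To make the 21-point skeletal map concrete, here is a minimal pure-Python sketch of the landmark layout. The names follow MediaPipe's published hand landmark numbering (index 0 is the wrist, then four points per finger). The edge list is a simplified tree built for illustration and is not MediaPipe's exact connection set, and the helper names are hypothetical.

```python
FINGERS = ("THUMB", "INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY")


def landmark_names():
    """Return the 21 hand landmark names in index order (0 = WRIST)."""
    names = ["WRIST"]
    for finger in FINGERS:
        # The thumb uses different joint names than the other four fingers.
        if finger == "THUMB":
            joints = ("CMC", "MCP", "IP", "TIP")
        else:
            joints = ("MCP", "PIP", "DIP", "TIP")
        names += [f"{finger}_{joint}" for joint in joints]
    return names


def skeleton_edges():
    """Simplified skeleton: wrist -> base of each finger -> ... -> fingertip.

    Illustrative only; it omits the links across the palm.
    """
    edges = []
    for finger_index in range(5):
        base = 1 + 4 * finger_index          # first landmark of this finger
        edges.append((0, base))              # wrist to finger base
        edges += [(i, i + 1) for i in range(base, base + 3)]
    return edges


if __name__ == "__main__":
    names = landmark_names()
    for a, b in skeleton_edges():
        print(f"{names[a]} -> {names[b]}")
```

Each finger contributes four connected points, so the whole hand forms a small tree rooted at the wrist.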

"An important feature of this system is that the complete recognition process—from capturing gestures to categorizing them—is performed smoothly in real-time, irrespective of changing light settings or background environments," explained Bader Alsharif, who is both the lead author and a PhD student at Florida Atlantic University’s Department of Electrical Engineering and Computer Science.

All of this is achieved with standard, off-the-shelf hardware, which underscores the system's practical potential as an accessible and scalable assistive technology suitable for real-world use.

The results of the study, published in the journal Sensors, confirm the system's effectiveness: it achieved 98.2% accuracy (mean Average Precision, mAP@0.5) with minimal latency. This shows that the system can deliver high precision in real time, making it well suited to applications that need fast, reliable performance, such as live video processing and interactive technologies.
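For readers unfamiliar with the metric, mAP@0.5 counts a detection as correct when its box overlaps a ground-truth box of the same class with an intersection over union (IoU) of at least 0.5, then averages the per-class average precision. The sketch below is a generic illustration of that calculation for one class, using all-point interpolation; it is not the evaluation code used in the study, and the function names and data layout are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, iou_thr=0.5):
    """Average precision for a single class.

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    total_gt = sum(len(boxes) for boxes in ground_truth.values())
    if total_gt == 0:
        return 0.0
    matched = {img: [False] * len(b) for img, b in ground_truth.items()}
    tp = fp = 0
    curve = []  # (recall, precision) after each detection, best score first
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, gt_box in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt_box)
            if overlap > best and not matched[img][j]:
                best, best_j = overlap, j
        if best_j >= 0 and best >= iou_thr:
            matched[img][best_j] = True  # each ground-truth box matches once
            tp += 1
        else:
            fp += 1
        curve.append((tp / total_gt, tp / (tp + fp)))
    # Area under the precision-recall curve, using the precision envelope.
    ap, prev_recall = 0.0, 0.0
    for i, (recall, _) in enumerate(curve):
        best_precision = max(p for _, p in curve[i:])
        ap += (recall - prev_recall) * best_precision
        prev_recall = recall
    return ap


def mean_average_precision(per_class_ap):
    """mAP is the plain mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```

In an alphabet recognizer, `average_precision` would be computed once per letter class and the results averaged with `mean_average_precision`.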

The ASL Alphabet Hand Gesture Dataset contains 130,000 images covering a wide range of hand gestures captured under varied conditions, which helps models generalize. These conditions include different lighting (bright, dim, and shadowed), different backgrounds (both indoor and outdoor), and a variety of hand angles and orientations to improve robustness.

Each image is carefully annotated with 21 keypoints that mark essential hand structures such as the fingertips, knuckles, and wrist. These annotations provide a skeletal map of the hand, allowing models to distinguish between similar gestures with high accuracy.
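One reason keypoints can help across users is that they are easy to normalize for hand position and size. The sketch below illustrates that idea only; the article does not describe any such preprocessing step, so the function `normalize_landmarks` and the choice of reference points are assumptions. It uses MediaPipe's numbering, where index 0 is the wrist and index 9 is the base of the middle finger.

```python
import math

WRIST = 0
MIDDLE_FINGER_MCP = 9  # base of the middle finger in MediaPipe's numbering


def normalize_landmarks(points):
    """Make 21 (x, y) keypoints independent of hand position and size.

    Hypothetical preprocessing step: the wrist becomes the origin and the
    wrist-to-middle-finger-base distance becomes the unit length.
    """
    if len(points) != 21:
        raise ValueError("expected 21 keypoints")
    wx, wy = points[WRIST]
    mx, my = points[MIDDLE_FINGER_MCP]
    scale = math.hypot(mx - wx, my - wy)
    if scale == 0:
        raise ValueError("wrist and middle finger base coincide")
    return [((x - wx) / scale, (y - wy) / scale) for x, y in points]
```

After this step, the same handshape made by a small hand near the camera edge and a large hand in the center of the frame yields the same coordinates, so a classifier sees only the shape itself.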

"This initiative beautifully illustrates the potential of advanced artificial intelligence in benefiting mankind," stated Dr. Imad Mahgoub, who is also the co-author and holds the position of Tecore Professor within the FAU Department of Electrical Engineering and Computer Science.

"By combining deep learning with hand landmark detection, our team created a system that achieves high accuracy while remaining accessible and practical for everyday use. This is a significant step toward more inclusive communication technologies."

Approximately 11 million people in the U.S., or 3.6% of the population, are deaf. In addition, about 15% of American adults (37.5 million) experience some degree of hearing difficulty.

"The significance of this research lies in its potential to transform communication for the deaf community by providing an AI-driven tool that translates American Sign Language gestures into text. This enables smoother interactions in education, workplaces, health care, and social settings," said Dr. Mohammad Ilyas, a co-author and professor in the FAU Department of Electrical Engineering and Computer Science.

"By developing a robust and accessible ASL interpretation system, our study contributes to the advancement of assistive technologies that break down barriers for people who are deaf or hard of hearing."

Future work will focus on extending the system beyond individual ASL letters to the interpretation of full ASL sentences. This would allow more natural and fluid communication, letting users convey complete thoughts and phrases.

Stella Batalama, Ph.D., dean of the College of Engineering and Computer Science, said, "This study underscores the significant impact of AI-powered assistive tools in empowering the deaf community." She added, "Through real-time American Sign Language recognition, this technology helps build a more equitable society by closing the communication divide."

The technology lets people with hearing impairments interact more easily with the world around them, whether they are introducing themselves, navigating their surroundings, or simply having an everyday conversation. It improves accessibility and supports greater social inclusion, helping to create a more connected and empathetic community for everyone.

The study was also co-authored by Easa Alalwany, Ph.D., a recent doctoral graduate of the FAU College of Engineering and Computer Science who is now an assistant professor at Taibah University in Saudi Arabia, and Ali Ibrahim, Ph.D., another graduate of the college's doctoral program.

More information: Bader Alsharif et al., Real-Time Translation of American Sign Language Utilizing Deep Learning and Keypoint Tracking, Sensors (2025). DOI: 10.3390/s25072138

Provided by Florida Atlantic University

This story was originally published on Tech Xplore.